AI Projects

Recommendation Systems

For a master's project, I built a recommendation system called RelRec. I trained multiple models and compared them. The winning algorithm used the Latent Dirichlet Allocation algorithm. The recommendation system was full IaC. It included a microservice pipeline to source documents, parse, sanitize, remove stop words, run algorithm, and compare benchmarks.

Research: https://scholarsarchive.byu.edu/etd/6195/

Code: http://linguistics.byu.edu/thesisdata/relrec.html (this page will be removed when Professor Lonsdale retires)

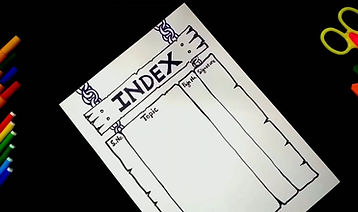

Data Indexing

Visuals are critical to understanding AI output. This project converted output from LDA (Latent Dirichlet Allocation) and presents it as an interactive, nested index. A key feature is the ability to load source documents to verify. This allows humans to determine if the index is robust or needs additional tweaking of parameters.

Classification: Is it actually candy?

Machine learning classifiers can help answer: Is this what it claims to be?

For nutritionists, this is a critical question for every food item. In this model, we demonstrated that a back-propagation model was sufficient to answer this while surpassing baseline performance expectations. Data was sourced from the FDA nutritional information database.

https://bean5.github.io/ml-food-classifier/

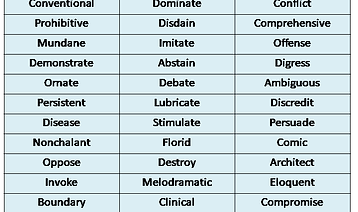

Human Training (Education)

Machine learning could one day help select optimal datasets for educational systems, but back in 2012, I set out to build a simpler tool: a command-line program for learning SAT vocabulary words.

I wrote it in Perl, a language often praised for its NLP capabilities, as both a way to sharpen my skills and to create something useful. Despite its simplicity—just 175 lines of code and under 1 MB in size—the tool delivers a practical learning experience. This project predates frameworks like TensorFlow, making its lightweight, command-line design a reflection of the era.

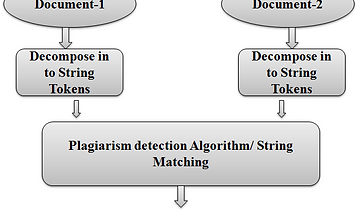

Plagiarism Detection with GUI

Goal: cross-platform paraphrase detection with user interface that can manipulate manipulate parameters on the fly. The result is a Java paraphrase detector that leverages n-gram/gene sequence alignment.

Data Analysis: Topics over Time

When you have a lot of documents spanning a lot of years, key questions invariably arise such as:

-

which topics are trending

-

which topics were trending 10 years ago

Using Topics Over Time using Gibbs sampling algorithms, I did exactly this. To read up on a model and conclusions, see Theological topics through time: An application of Gibbs sampling and other metrics to analyze topic venues in religious discourses

Regex

Regular expressions are a powerful way to uncover linguistic patterns in text, but some patterns are better captured with customizable automatons. After exploring this area through research, I developed a tool that detects alliteration in documents using orthographic cues.

Related to this is haiku detection.

Tooling

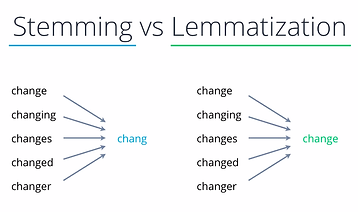

I upgraded a stemming tool to work with Java 7. It is over 11 years old. Being in Java, it probably still works. My code is still available, while the source code is no longer on GitHub.

I recommend tools like Python Natural Language ToolKit (NLTK) at this point. Not because they are better, but because they are more mainstream and therefore render code more re-usable and maintainable. Or use lemmatizers.

https://bean5.github.io/nlp-porter-stemmer-java/